Interpretable AI

Notes from the book Interpretable AI

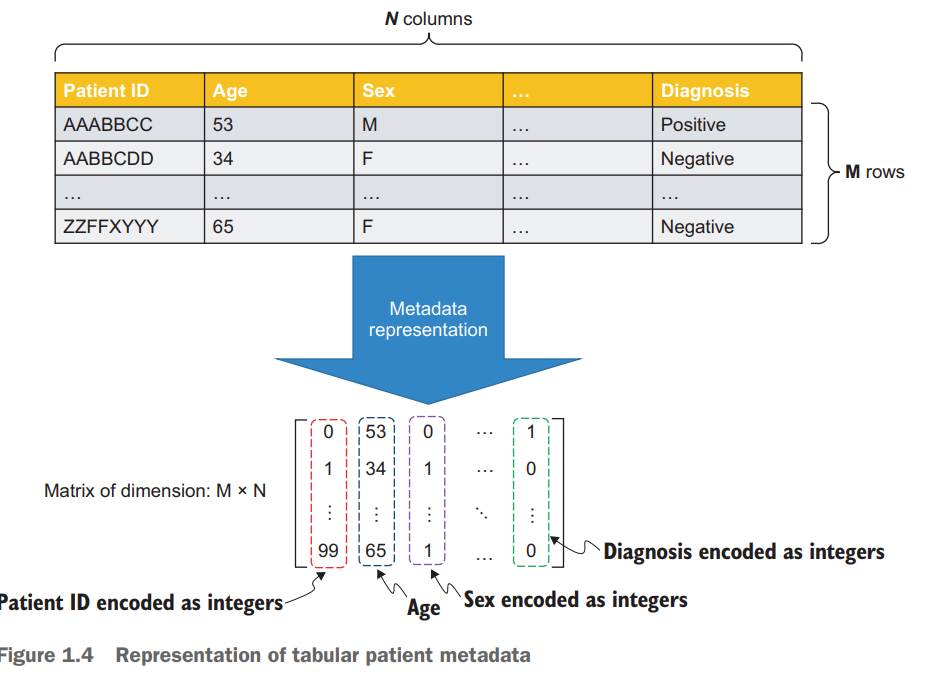

Data Representation

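Supervised and Unsupervised Learning

Supervised: the data has ground-truth labels (tags); tasks include regression and classification.

Unsupervised: no labels, e.g. clustering.

Reinforcement learning: the agent learns by interacting with an environment, e.g. robot vacuum cleaners, Go, chess.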

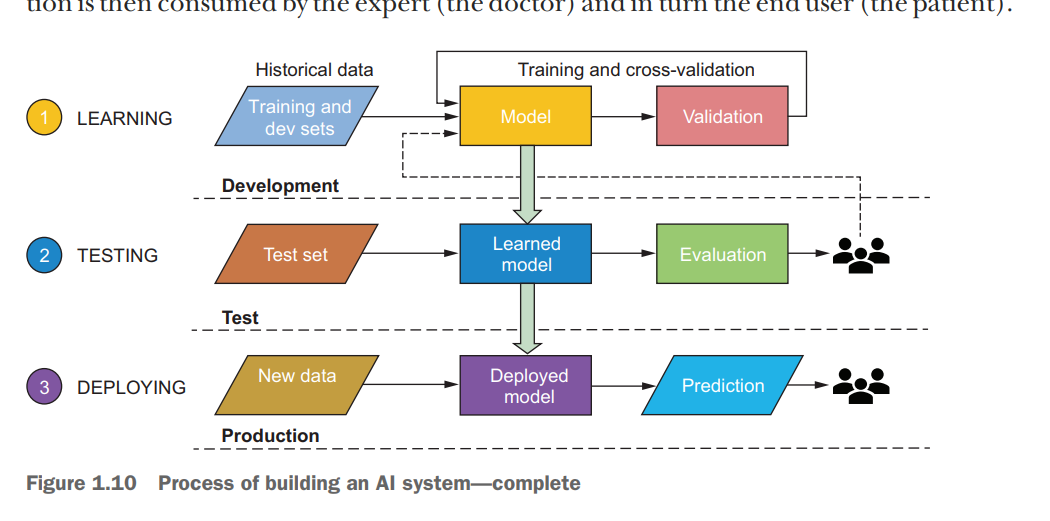

Three steps:

Learning, Testing, Deploying

Data Leakage

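For example, if information from the doctor's diagnosis leaks into the features, performance on the test set goes up, but performance on new data suffers badly.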
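A minimal sketch of this effect, using only the standard library. Everything here is hypothetical: the patient records are simulated, `symptom` is a weakly informative feature, and `diagnosis` stands in for the leaked doctor's diagnosis, which is assumed to be present in the collected test set but missing (defaulting to 0) for new patients.

```python
import random

random.seed(0)

def make_patient(with_diagnosis):
    """Simulated patient record (hypothetical fields, for illustration only)."""
    sick = random.random() < 0.5
    # Weakly informative feature available at prediction time
    symptom = random.gauss(1.0 if sick else 0.0, 1.0)
    # Leaky feature: recorded only after the outcome is already known
    diagnosis = int(sick) if with_diagnosis else 0
    return {"symptom": symptom, "diagnosis": diagnosis, "sick": sick}

def leaky_model(patient):
    # Relies entirely on the leaked field
    return patient["diagnosis"] == 1

def honest_model(patient):
    # Uses only information available before the diagnosis
    return patient["symptom"] > 0.5

def accuracy(model, patients):
    return sum(model(p) == p["sick"] for p in patients) / len(patients)

test_set = [make_patient(with_diagnosis=True) for _ in range(2000)]
new_data = [make_patient(with_diagnosis=False) for _ in range(2000)]

leaky_test = accuracy(leaky_model, test_set)
leaky_new = accuracy(leaky_model, new_data)
honest_test = accuracy(honest_model, test_set)
honest_new = accuracy(honest_model, new_data)

print(f"leaky model : test={leaky_test:.2f}  new={leaky_new:.2f}")
print(f"honest model: test={honest_test:.2f}  new={honest_new:.2f}")
```

The leaky model looks perfect on the test set and falls to roughly chance on new data, while the honest model scores about the same on both.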

Bias

Sampling bias: the sample covers only a small subset of cases rather than the whole population.
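A small illustration of sampling bias, assuming a made-up population of ages and a survey that only reaches people under 30 (both are hypothetical choices for this sketch):

```python
import random
import statistics

random.seed(0)

# Hypothetical population: 10,000 ages spread uniformly between 18 and 89
population = [random.randint(18, 89) for _ in range(10_000)]

# Representative sample: drawn uniformly from the whole population
random_sample = random.sample(population, 500)

# Biased sample: only people under 30 were reached
biased_sample = [age for age in population if age < 30][:500]

true_mean = statistics.mean(population)
random_err = abs(statistics.mean(random_sample) - true_mean)
biased_err = abs(statistics.mean(biased_sample) - true_mean)

print(f"true mean age      : {true_mean:.1f}")
print(f"random sample error: {random_err:.1f}")
print(f"biased sample error: {biased_err:.1f}")
```

Both samples have the same size; only the biased one gives a badly wrong estimate, and a model trained on it would inherit that skew.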

Regulatory

GDPR

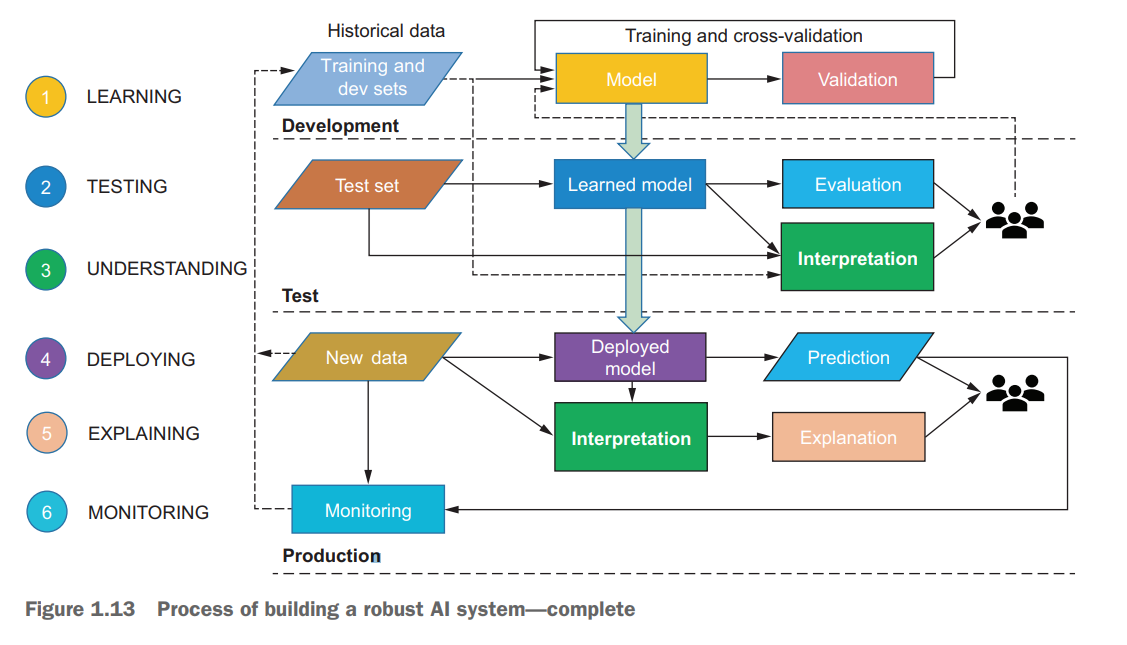

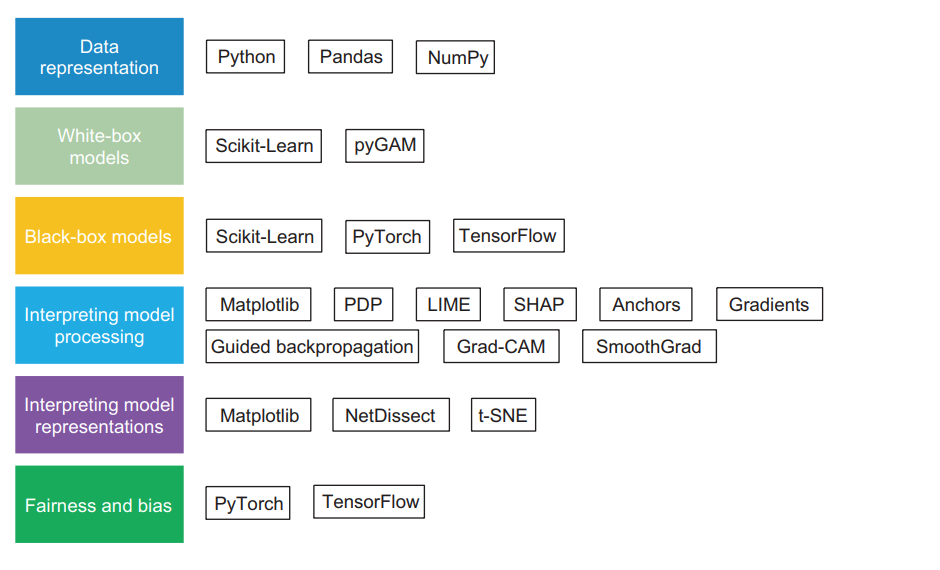

To keep the three issues above from affecting our model, we introduce interpretation, explanation, and monitoring.

Interpretability vs Explainability

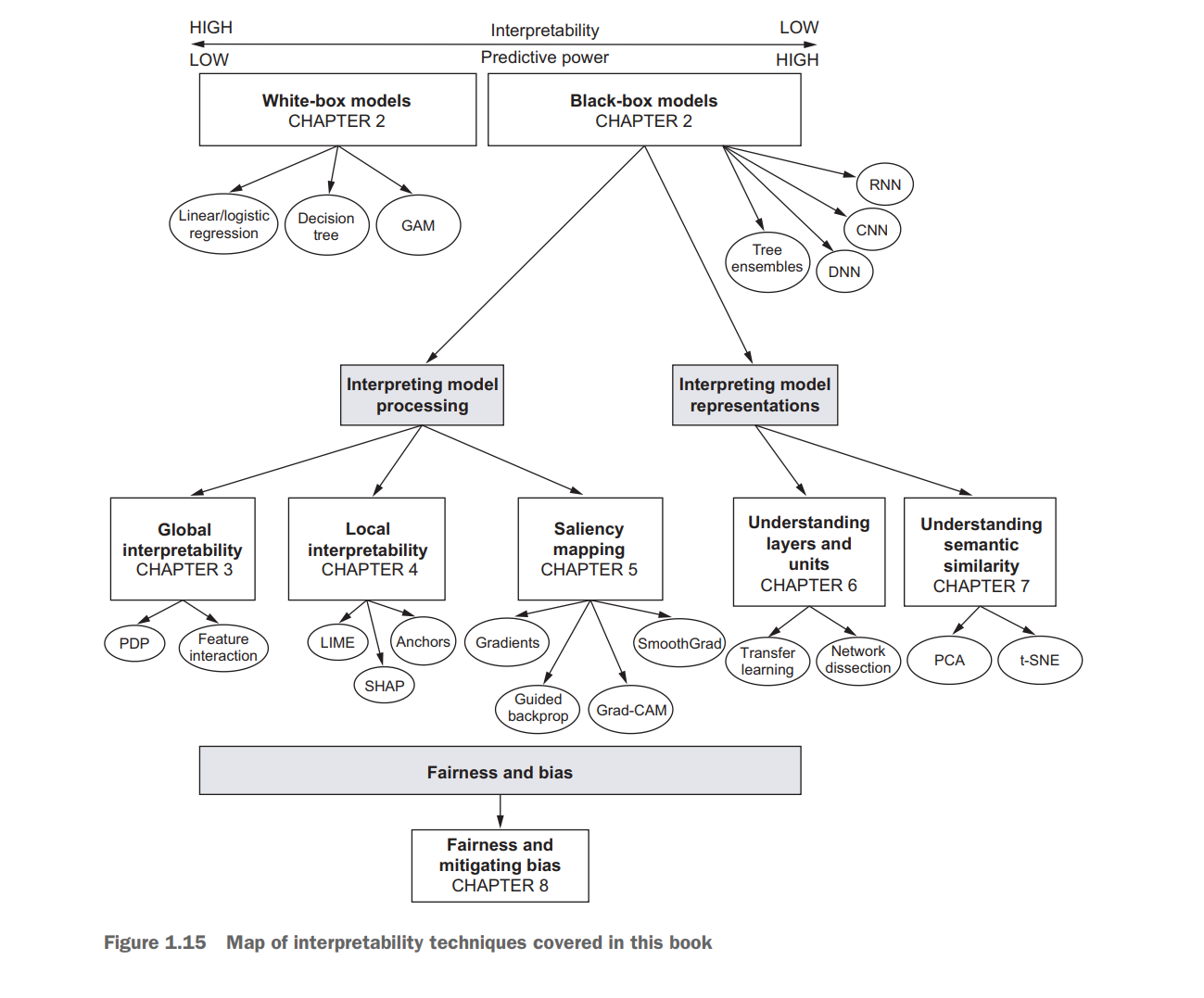

Interpretability is all about understanding the cause and effect within an AI system. It is the degree to which we can consistently estimate what a model will predict given an input, understand how the model came up with the prediction, understand how the prediction changes with changes in the input or algorithmic parameters, and finally, understand when the model has made a mistake.

Explainability, on the other hand, goes beyond interpretability in that it helps us understand in a human-readable form how and why a model came up with a prediction. It explains the internal mechanics of the system in human terms, with the intent to reach a much wider audience. Explainability requires interpretability as a building block and also looks to other fields and areas, such as human-computer interaction (HCI), law, and ethics.

Decision tree: prone to overfitting.

GAM (generalized additive model): y = w0 + f1(x1) + f2(x2) + … + fn(xn), where each fi is a function of a single feature.
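The additive form is what makes a GAM easy to inspect: the prediction is the intercept plus one contribution per feature. A minimal sketch, assuming hand-picked shape functions (in a real GAM each fi is learned from data, for example as a spline; the functions and `w0` below are hypothetical):

```python
import math

w0 = 0.5  # intercept (hypothetical value)

# One shape function per feature; fixed by hand here purely for illustration
shape_functions = [
    lambda x: 2.0 * x,      # f1: linear effect
    lambda x: x ** 2,       # f2: quadratic effect
    lambda x: math.sin(x),  # f3: periodic effect
]

def gam_predict(x):
    """Return (prediction, per-feature contributions) for one input vector."""
    contributions = [f(xi) for f, xi in zip(shape_functions, x)]
    return w0 + sum(contributions), contributions

y, parts = gam_predict([1.0, 2.0, 0.0])
print(y)      # 0.5 + 2.0 + 4.0 + 0.0 = 6.5
print(parts)  # [2.0, 4.0, 0.0]
```

Because the terms do not interact, each entry in `parts` can be read directly as how much that feature moved the prediction.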

Bagging: train multiple decision trees in parallel and combine them to reduce the effect of overfitting.
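A rough sketch of bagging with one-split regression trees (stumps), written with the standard library only. The data, `fit_stump`, `bagging_fit`, and `bagging_predict` are illustrative names, not from the book. The trees are independent of each other, so they could be trained in parallel; a plain loop is used here for simplicity.

```python
import random

random.seed(0)

def fit_stump(points):
    """Fit a one-split regression tree on (x, y) pairs by minimizing squared error."""
    xs = sorted({x for x, _ in points})
    best = None
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2  # candidate threshold between two neighboring x values
        left = [y for x, y in points if x <= t]
        right = [y for x, y in points if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    if best is None:  # all x identical: fall back to predicting the mean
        m = sum(y for _, y in points) / len(points)
        return lambda x: m
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

def bagging_fit(points, n_trees=15):
    """Train each tree on its own bootstrap sample (drawn with replacement)."""
    trees = []
    for _ in range(n_trees):
        bootstrap = random.choices(points, k=len(points))
        trees.append(fit_stump(bootstrap))
    return trees

def bagging_predict(trees, x):
    """Average the individual tree predictions."""
    return sum(tree(x) for tree in trees) / len(trees)

# Hypothetical noisy step function: y is about 0 for x <= 0.5 and about 1 above
xs = [random.random() for _ in range(100)]
data = [(x, (1.0 if x > 0.5 else 0.0) + random.gauss(0, 0.3)) for x in xs]

trees = bagging_fit(data)
low, high = bagging_predict(trees, 0.1), bagging_predict(trees, 0.9)
print(f"prediction at x=0.1: {low:.2f}, at x=0.9: {high:.2f}")
```

Averaging over bootstrap samples smooths out the quirks any single tree picks up from its particular training points.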

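Boosting: train trees sequentially; each new tree learns from the errors of the trees before it, starting with the first, to improve performance.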
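One way to make "learning from errors" concrete is a gradient-boosting-style loop for squared error, where each new stump is fit to the residuals of the ensemble so far. The notes do not say which boosting variant is meant, so this is an assumption; the data, learning rate, and function names are also hypothetical.

```python
import math

def fit_stump(xs, targets):
    """One-split regression tree: pick the threshold that minimizes squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

LEARNING_RATE = 0.5  # shrinks each tree's correction (hypothetical value)

def boost(xs, ys, n_rounds=30):
    """Sequentially fit stumps to the current residuals."""
    trees = []
    preds = [0.0] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]  # errors of the ensemble so far
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + LEARNING_RATE * tree(x) for p, x in zip(preds, xs)]
    return trees

def predict(trees, x):
    return sum(LEARNING_RATE * tree(x) for tree in trees)

def mse(trees, xs, ys):
    return sum((y - predict(trees, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical training data: one period of a sine wave on 20 points
xs = [i / 20 for i in range(20)]
ys = [math.sin(2 * math.pi * x) for x in xs]

trees = boost(xs, ys)
first_mse = mse(trees[:1], xs, ys)  # training error using only the first tree
final_mse = mse(trees, xs, ys)      # training error using the full ensemble
print(f"MSE with 1 tree: {first_mse:.4f}, with {len(trees)} trees: {final_mse:.4f}")
```

Unlike bagging, the trees here cannot be trained independently, since each one depends on the residuals left by its predecessors.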